A Python SDK for Code Mode

Through a websocket callback mechanism, agents can call MCP servers and tools defined in any language within a single execution environment.

Code Mode delivers dramatic token efficiency by letting agents orchestrate multiple tools through generated code instead of sequential tool calls. The challenge: you need your Python ML models, data pipelines, and business logic accessible alongside MCP servers for GitHub, Stripe, and other APIs. Previously, this meant rewriting Python tools in TypeScript or giving up Code Mode's efficiency gains.

Port of Context released pctx-py, the first Code Mode architecture that supports any programming language while running 100% locally. Agents can now orchestrate Python tools and MCP servers together within unified Code Mode execution. Your existing Python codebase becomes available as typed functions alongside any MCP servers you've configured. The agent generates a single TypeScript code block that coordinates all operations—Python ML inference, database queries, and MCP API calls—in one efficient execution cycle.

This unlocks the full token efficiency of Code Mode without abandoning your Python ecosystem. Write tools in Python using familiar libraries (pandas, scikit-learn, asyncpg), register them with pctx, and agents can mix them freely with MCP servers. Single execution. No context serialization overhead. Type-safe orchestration across your entire tool set.

Python Tools + MCP Servers = Maximum Token Efficiency

MCP servers provide standardized access to external APIs—GitHub, Stripe, Slack, your internal services. They're designed for Code Mode, exposing typed function interfaces that agents can call from generated code. But your business logic, ML models, and data processing live in Python.

Before pctx-py, you faced an impossible tradeoff:

Option 1: Rewrite Python tools in TypeScript

- Duplicate your ML pipelines and data processing logic

- Maintain parallel implementations

- Abandon Python's ecosystem (pandas, scikit-learn, asyncpg)

Option 2: Use traditional tool calling for Python, Code Mode for MCP

- Python tools serialize to context window (expensive, slow)

- MCP servers use Code Mode (efficient)

- Split your agent workflow across two execution models

Option 3: Abandon Code Mode entirely

- Fall back to sequential tool calling for everything

- Serialize all tools into context

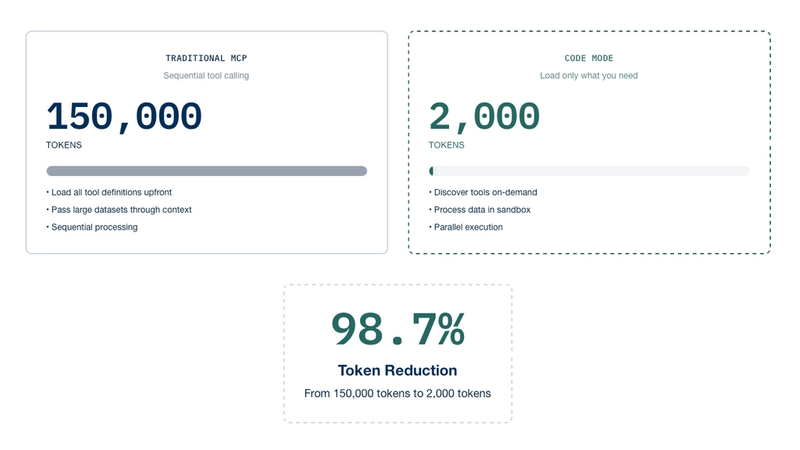

- Accept 75x higher token costs

pctx-py eliminates this tradeoff: Python tools and MCP servers, unified in Code Mode. Your Python code registers as typed functions. Agents see Python tools and MCP servers as a single, cohesive API. One code block orchestrates everything.
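To make "registers as typed functions" concrete, here is a minimal sketch of how a Python signature could be rendered as a TypeScript-style declaration. It uses only the standard library; the `ts_declaration` helper and the type mapping are hypothetical illustrations, not pctx-py's actual implementation.

```python
import inspect
from typing import get_type_hints

# Hypothetical mapping from Python annotations to TypeScript type names.
_TS_TYPES = {
    str: "string",
    int: "number",
    float: "number",
    bool: "boolean",
    type(None): "void",
}


def ts_declaration(func, namespace: str) -> str:
    """Render a Python function signature as a TypeScript-style declaration."""
    hints = get_type_hints(func)
    params = ", ".join(
        f"{name}: {_TS_TYPES.get(hints.get(name), 'unknown')}"
        for name in inspect.signature(func).parameters
    )
    returns = _TS_TYPES.get(hints.get("return"), "unknown")
    return f"{namespace}.{func.__name__}({params}): Promise<{returns}>"


def predict_churn(customer_id: str, threshold: float = 0.5) -> bool:
    """Illustrative stand-in for a real model call."""
    return threshold < 0.8


print(ts_declaration(predict_churn, "ml"))
```

Anything the mapping does not recognize falls back to `unknown`; a real generator would presumably handle containers and Pydantic models as well.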

Architecture: Unified Python + MCP Execution

The pctx code server connects Python tools and MCP servers through a websocket callback mechanism:

MCP Servers: Connect via standard MCP protocol. Expose typed functions like github.create_issue() and stripe.get_customer().

Python Tools: Register via pctx-py websocket connection. Expose typed functions like ml.predict_churn() and data.query_metrics().

Agent's View: All tools appear as typed TypeScript functions in a single namespace. The agent generates code that freely mixes Python and MCP calls—ml.predict_churn() alongside github.create_issue()—without knowing which backend implements each function.

The code server routes each call to the appropriate runtime (Python process or MCP server), collects results, and returns them to the sandbox for continued execution. From the agent's perspective, everything is just typed functions available for orchestration.
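The routing idea can be sketched as a small dispatcher keyed by qualified function name. This is a toy model under stated assumptions: the `Router` class and the two stand-in tools are hypothetical, and the real code server talks to separate processes rather than in-process coroutines.

```python
import asyncio
from typing import Any, Awaitable, Callable

Handler = Callable[..., Awaitable[Any]]


class Router:
    """Toy dispatcher: maps "namespace.function" names to async handlers."""

    def __init__(self) -> None:
        self._handlers: dict[str, Handler] = {}

    def register(self, qualified_name: str, handler: Handler) -> None:
        self._handlers[qualified_name] = handler

    async def call(self, qualified_name: str, **kwargs: Any) -> Any:
        handler = self._handlers.get(qualified_name)
        if handler is None:
            raise KeyError(f"no tool registered as {qualified_name!r}")
        return await handler(**kwargs)


async def predict_churn(customer_id: str) -> dict:
    # Stand-in for a tool served by a Python process.
    return {"backend": "python", "customer_id": customer_id}


async def create_issue(title: str) -> dict:
    # Stand-in for a tool served by an MCP server.
    return {"backend": "mcp", "title": title}


async def main() -> list:
    router = Router()
    router.register("ml.predict_churn", predict_churn)
    router.register("github.create_issue", create_issue)
    # The caller uses one interface regardless of which backend answers.
    return list(await asyncio.gather(
        router.call("ml.predict_churn", customer_id="cust_12345"),
        router.call("github.create_issue", title="Churn risk"),
    ))


results = asyncio.run(main())
print(results)
```

The caller never inspects which backend a name resolves to, which is the property the paragraph above describes.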

How It Works

Execution Flow

The agent never knows where tools execute. Python tools, MCP servers, and future language SDKs all present the same interface to the TypeScript sandbox. The code server handles callback routing transparently.
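One way to picture the callback step is as a request and reply matched by an id. The sketch below simulates the tool-runtime side only, with no real websocket. The message fields (`id`, `tool`, `args`, `result`, `error`) are assumptions made for illustration; this post does not describe the actual pctx wire format.

```python
import asyncio
import json

# Hypothetical message fields; the real pctx wire format may differ.


async def analyze_sentiment(text: str) -> dict:
    """Stand-in tool; a real one would call a model."""
    return {"positive": 0.1, "negative": 0.8, "neutral": 0.1}


TOOLS = {"data.analyze_sentiment": analyze_sentiment}


async def handle_callback(raw: str) -> str:
    """Decode one request, run the named tool, and encode the reply."""
    request = json.loads(raw)
    reply = {"id": request["id"]}  # echo the id so the caller can match replies
    tool = TOOLS.get(request["tool"])
    if tool is None:
        reply["error"] = f"unknown tool: {request['tool']}"
    else:
        reply["result"] = await tool(**request["args"])
    return json.dumps(reply)


request = json.dumps(
    {"id": 1, "tool": "data.analyze_sentiment", "args": {"text": "too slow"}}
)
response = json.loads(asyncio.run(handle_callback(request)))
print(response)
```

Because the exchange is plain JSON keyed by tool name, nothing in it depends on the language that implements the tool.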

Python SDK: First Multi-Language Client

pctx-py enables Python developers to define tools that agents can orchestrate through Code Mode:

Decorator-Based Tools

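    import asyncpg
    from pctx_client import tool

    # DATABASE_URL (your PostgreSQL connection string) and load_model
    # (your own model-loading helper) are assumed to be defined elsewhere.

    @tool(namespace="data")
    async def analyze_sentiment(text: str) -> dict[str, float]:
        """Analyze sentiment of text using ML model"""
        model = load_model("sentiment-analyzer")
        scores = model.predict(text)
        return {
            "positive": scores[0],
            "negative": scores[1],
            "neutral": scores[2],
        }

    @tool(namespace="data")
    async def fetch_database_metrics() -> list[dict]:
        """Query PostgreSQL for performance metrics"""
        # asyncpg.connect() must be awaited; close the connection explicitly
        conn = await asyncpg.connect(DATABASE_URL)
        try:
            rows = await conn.fetch(
                "SELECT * FROM metrics WHERE timestamp > NOW() - INTERVAL '1 hour'"
            )
            return [dict(row) for row in rows]  # convert Records to plain dicts
        finally:
            await conn.close()

Class-Based Tools for Complex State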

    from pctx_client import AsyncTool
    from pydantic import BaseModel, Field

    class SearchQuery(BaseModel):
        query: str
        top_k: int = Field(default=10)

    class SearchResult(BaseModel):
        title: str
        url: str
        relevance: float

    class VectorSearch(AsyncTool):
        name: str = "vector_search"
        namespace: str = "search"
        description: str = "Semantic search over document embeddings"
        input_schema: type[BaseModel] = SearchQuery
        output_schema: type[list[SearchResult]] = list[SearchResult]

        def __init__(self):
            super().__init__()
            # load_faiss_index and load_encoder_model are placeholders for your own helpers
            self.index = load_faiss_index("documents.index")
            self.encoder = load_encoder_model()

        async def _ainvoke(self, query: str, top_k: int = 10) -> list[SearchResult]:
            embedding = self.encoder.encode(query)
            results = self.index.search(embedding, top_k)
            return [
                SearchResult(
                    title=doc.title,
                    url=doc.url,
                    relevance=score
                )
                for doc, score in results
            ]

Agent Orchestration

Once your Python tools are registered, the agent receives them as typed functions alongside any MCP servers you've configured. The agent's language model generates TypeScript code that orchestrates these tools:

    // The agent writes this TypeScript code
    // It has no knowledge that some tools run in Python, others via MCP

    // Execute three operations in parallel
    const [sentiment, metrics, searchResults] = await Promise.all([
      data.analyze_sentiment("Customer feedback from latest release"), // Python ML model
      data.fetch_database_metrics(), // Python database query
      search.vector_search({ query: "performance optimization", top_k: 5 }) // Python vector search
    ]);

    // Use the results to make a decision
    if (sentiment.negative > 0.7) {
      // Call an MCP server tool
      await github.create_issue({
        repo: "product/api",
        title: "Negative sentiment spike detected",
        body: `Metrics: ${JSON.stringify(metrics)}\nRelated docs: ${searchResults.map(r => r.url).join(", ")}`
      });
    }

This code executes in a single pass. No sequential tool calls. No context serialization overhead. The code server routes each function call to its implementation (a Python process, an MCP server, or a future language runtime) and returns results to the sandbox for continued execution.

Framework Integration

    from pctx_client import Pctx, tool

    @tool
    def get_weather(city: str) -> str:
        """Get weather for a city"""
        return f"It's sunny in {city}"

    # Initialize with your tools
    # (analyze_sentiment and fetch_database_metrics are the decorator-based tools defined earlier)
    p = Pctx(tools=[get_weather, analyze_sentiment, fetch_database_metrics])

    # Connect to the code server (await requires an async context)
    await p.connect()

    # Use with LangChain
    langchain_tools = p.langchain_tools()

    # Or CrewAI, OpenAI, Pydantic AI
    # crewai_tools = p.crewai_tools()
    # openai_tools = p.openai_tools()
    # pydantic_tools = p.pydantic_ai_tools()

The Pctx class wraps your Python tools alongside the three core Code Mode functions that enable agents to discover, inspect, and execute code with access to your tools.

Why This Matters

1. MCP Ecosystem + Python Ecosystem = Complete Agent Tooling

The MCP ecosystem provides hundreds of pre-built servers for popular APIs: GitHub, Slack, Google Drive, Notion, Stripe. These give agents immediate access to external services. But your unique business logic lives in Python—ML models trained on your data, pipelines processing your formats, queries against your databases.

pctx-py lets you combine both: leverage the MCP ecosystem for standard integrations while adding Python tools for domain-specific operations. Agents orchestrate both in a single code block, eliminating the token overhead of traditional tool calling.

2. Keep Python Where It Belongs

Python's strength is data: pandas for transformation, scikit-learn for ML, asyncpg for databases, numpy for computation. These libraries represent decades of optimization and community investment. Rewriting them in TypeScript to fit Code Mode constraints means abandoning this ecosystem and duplicating battle-tested logic.

With pctx-py, Python tools stay in Python. Your ML inference runs with scikit-learn. Your data processing uses pandas. Your database queries use asyncpg. The code server handles orchestration and type safety while Python handles what it does best.

3. Token Efficiency Without Compromise

Traditional tool calling serializes every tool definition into context: 200+ tokens per tool, multiplied by your entire tool set, repeated on every request. With 50 tools, that is at least 10,000 tokens spent before any real work begins.

Code Mode solves this by exposing tools as typed functions—~15 tokens per tool. But this only worked if you rewrote Python tools in TypeScript. pctx-py brings Python tools into Code Mode's efficient execution model. All your tools—Python and MCP—get Code Mode's token efficiency.
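The arithmetic behind these figures, worked through for the 50-tool case. The per-tool numbers are the ones quoted above ("200+" is treated as a lower bound); nothing here is a measurement.

```python
TOOLS = 50
TOKENS_PER_TOOL_SCHEMA = 200  # "200+ tokens per tool", taken as a lower bound
TOKENS_PER_TOOL_TYPED = 15    # "~15 tokens per tool" as a typed function

traditional = TOOLS * TOKENS_PER_TOOL_SCHEMA
code_mode = TOOLS * TOKENS_PER_TOOL_TYPED

print(traditional)              # 10000
print(code_mode)                # 750
print(traditional - code_mode)  # 9250
```

This covers only the cost of describing the tools on each request; it says nothing about the tokens spent on intermediate results.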

Getting Started

    # Install the code server
    npm install -g @portofcontext/pctx

    # Start the code server
    pctx start

    # Install Python SDK
    pip install pctx-client

    # Or with framework support (quoted so shells such as zsh do not expand the brackets)
    pip install "pctx-client[langchain]"

Define your tools and connect to your agent:

    from pctx_client import Pctx, tool

    @tool
    def analyze_data(data: str) -> dict:
        """Analyze data using Python"""
        return {"result": "analyzed"}

    p = Pctx(tools=[analyze_data])
    await p.connect()  # await requires an async context

    # Use with any agent framework
    tools = p.langchain_tools()

Everything runs locally. No API keys required. Fully open source.

Real-World Example: Customer Risk Analysis

An agent orchestrates Python ML models alongside MCP servers to assess customer churn risk:

    import asyncpg
    from pctx_client import tool

    # DB_URL (your PostgreSQL connection string) is assumed to be defined elsewhere.

    @tool(namespace="ml")
    def predict_churn(customer_id: str) -> dict:
        """Predict customer churn probability"""
        # Load features and run ML model (hard-coded result for illustration)
        return {
            "churn_probability": 0.85,
            "factors": ["low engagement", "payment issues"]
        }

    @tool(namespace="data")
    async def update_risk_score(customer_id: str, score: float) -> None:
        """Update customer risk score in database"""
        # asyncpg.connect() must be awaited; close the connection explicitly
        conn = await asyncpg.connect(DB_URL)
        try:
            await conn.execute(
                "UPDATE customers SET risk_score = $1 WHERE id = $2",
                score, customer_id
            )
        finally:
            await conn.close()

The agent generates TypeScript that orchestrates Python tools and MCP servers:

    // Parallel execution across Python and MCP
    const [prediction, customer] = await Promise.all([
      ml.predict_churn("cust_12345"),
      stripe.get_customer("cust_12345")
    ]);

    if (prediction.churn_probability > 0.8) {
      await data.update_risk_score(customer.id, prediction.churn_probability);
      await zendesk.create_ticket({
        subject: "High churn risk customer",
        customer: customer.email,
        priority: "high"
      });
    }

Single execution cycle. Type-safe orchestration. Tools in their native languages.

Open Source, Local-First

pctx and pctx-py are completely free and open source:

- No API keys

- No cloud dependencies

- No usage limits

- 100% local execution

The code server runs on your machine. Your tools execute locally. Your data never leaves your infrastructure.

Beyond Python: Multi-Language Architecture

pctx represents the first Code Mode architecture designed for multi-language execution from the ground up. The websocket callback protocol that enables Python tools isn't Python-specific—it's a language-agnostic contract between the code server and tool runtimes. We're building SDKs for Go, Rust, and other languages using the same protocol.

Unlike cloud-based Code Mode implementations locked to a single vendor's sandbox, pctx runs 100% locally on your infrastructure. As new language SDKs arrive, you'll be able to mix Python ML tools, Go infrastructure services, Rust performance-critical components, and MCP servers in the same Code Mode execution—all with the same token efficiency and type safety, all running on your own machines.

Get Started

- GitHub: github.com/portofcontext/pctx

- Python SDK: github.com/portofcontext/pctx/tree/main/pctx-py

- Documentation: pctx.readthedocs.io

About Port of Context: Port of Context builds infrastructure for production agentic AI, enabling secure and efficient connections between agents, tools, and data across language boundaries.